Red Hat, the leaders in powering the projects that smash particles together…

April 17, 2014

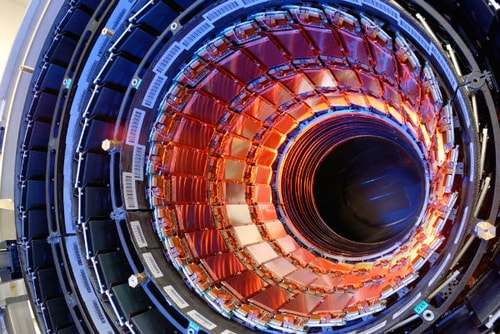

We often talk about the mission-critical operations that data centers have to handle. The operating system underneath those applications has to be up to the job, too. For example, the European Organization for Nuclear Research (a.k.a. CERN, a.k.a. the people behind the Large Hadron Collider) has begun using Red Hat Enterprise Linux (RHEL) on the servers that run its mission-critical applications.

Red Hat and the Large Hadron Collider

If you aren’t aware of what the Large Hadron Collider is and what the lovely people at CERN do: they are on a mission to recreate the conditions that existed just after the Big Bang by firing beams of protons at each other at nearly the speed of light and recording what comes out of the collisions. It’s pretty awesome and incredibly terrifying at the same time.

Anyway, they just started using Red Hat Enterprise Linux, along with virtualization and account management, on the 600 servers that run their critical applications. What did you do today? Red Hat is just running the servers that are trying to recreate the Big Bang. No big deal.

According to a press release, the RHEL operating system “…runs some of the organization’s most critical applications, including the Large Hadron Collider Logging Server and the central financial and HR systems for CERN’s members of personnel and 11,000 users. Given the nature of these applications, operating system stability is crucial to successful operations, a need fulfilled by the reliability and high availability offered by Red Hat Enterprise Linux.”

I think the most telling thing is the “Given the nature of these applications…” part, and here’s why: every time they run the Large Hadron Collider (shooting particles at high speeds, like really high speeds), it requires so much power that literally everything else shuts down, including the data center that logs the information.

Here’s a breakdown of what they’re doing: “The infrastructure is comprised of physical two-socket servers and is virtualized using Red Hat Enterprise Virtualization on Dell PowerEdge M610 servers (with Intel Nehalem or Ivy Bridge processors with between 96 GB and 256 GB RAM per server) and a Brocade FC8 SAN with a NetApp data storage system. The infrastructure is designed for high availability and is built to be completely redundant…”. Sounds fancy!

Essentially, CERN needed high availability and reliability because of the data loss that occurs with each outage during particle acceleration, and it needed to be able to handle and access all the data the experiments produce. CERN is also running Red Hat Enterprise Virtualization to operate its LHCb experiment, which studies the differences between matter and antimatter, because the platform can easily handle the millions of processes and systems that go along with the project.

Other OSes need to step up their game. Windows Enterprise, I’m looking your way…get us to Mars!

[Red Hat, Data Center Dynamics]

For more information contact Chris L.