Today’s technology relies heavily on servers, server clusters, and similar data-storage infrastructure. Even the article you are reading is most likely stored in a large data center somewhere on the planet.

Whether it is classic storage on a dedicated server or cloud storage, large amounts of information need to be stored so that you can reuse it and access it whenever you need to.

The Status Quo of Data Storage

Giant data centers, covering thousands of square meters, are being built by companies such as Google, Amazon, Yahoo, and other data-storage providers. Apart from occupying large areas of land, data centers account for approximately 1% of the world’s electricity consumption.

Even though large companies such as Google are trying to run solely on renewable energy, these large-scale data clusters still consume vast amounts of energy.

Switching to renewable energy alone is not enough. Companies should also work to reduce the overall power consumption of their data clusters.

Classic Data Centers

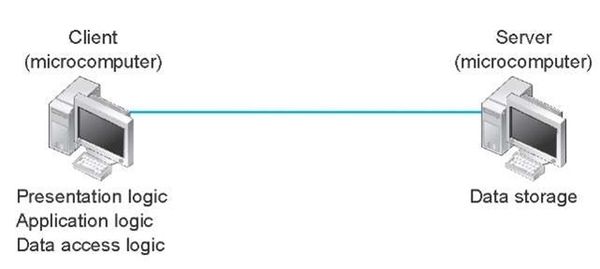

A classic data center infrastructure, in terms of server-side computing, is focused on two critical components: Data Access Logic and Data Storage.

Data Access Logic

Credit: In Depth Tutorials and Information

This essential part of a data center’s infrastructure focuses on the way information is received from the user, the way it is stored, and where it is stored.

If this layer could talk, whenever you saved something it would say something like this: “OK, the user has saved another article on their website and sent it to me. First, I will transform all of the information into JSON format, then I will compress it and store it on hard drive X.”
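The three steps this layer describes (serialize to JSON, compress, write to a drive) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular data center implements it: the in-memory `drives` dictionary is a hypothetical stand-in for physical drives, and `save_article` and `load_article` are hypothetical names.

```python
import json
import zlib

# Hypothetical stand-in for physical drives: drive name -> {key: compressed bytes}.
drives: dict[str, dict[str, bytes]] = {"X": {}, "Y": {}}


def save_article(article_id: str, article: dict, drive: str = "X") -> int:
    """Serialize an article to JSON, compress it, and store it on a drive.

    Returns the number of compressed bytes written.
    """
    payload = json.dumps(article).encode("utf-8")  # step 1: transform to JSON
    compressed = zlib.compress(payload)            # step 2: compress
    drives[drive][article_id] = compressed         # step 3: store on drive X
    return len(compressed)


def load_article(article_id: str, drive: str = "X") -> dict:
    """Reverse the steps: read the bytes, decompress them, parse the JSON."""
    compressed = drives[drive][article_id]
    return json.loads(zlib.decompress(compressed).decode("utf-8"))


if __name__ == "__main__":
    article = {"title": "Hello", "body": "Lorem ipsum " * 50}
    written = save_article("article-1", article)
    print(f"wrote {written} compressed bytes to drive X")
    assert load_article("article-1") == article
```

Every read and write passes through this translation work, which is why the layer needs a processor of its own.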

Data Storage

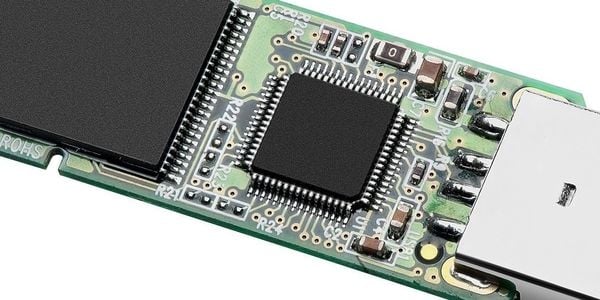

Unlike its business partner, the Data Access Logic, this layer of the infrastructure is made up of storage drives, such as Solid State Drives (SSDs), which store the data you send to them.

Highly Efficient Flash Storage vs. Outdated Data Processors

Some parts of the data storage funnel have improved over the past few years. For example, the Data Storage layer has improved significantly since Solid State Drives were introduced.

Credit: SearchStorage – TechTarget

Compared with its older brother, the Hard Disk Drive (HDD), the SSD is much faster. For example, a regular HDD writes at around 150 megabytes per second at best, while its younger brother, the SSD, can exceed 500 megabytes per second.

If you wanted to save a 1 GB movie on your computer using an HDD, it would take around 6 to 7 seconds. With an SSD, the same operation could be done in less than 3 seconds. That’s a lot faster.
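The 6-to-7-second and under-3-second figures follow from dividing the file size by the write speed. The sketch below assumes decimal units (1 GB = 1,000 MB) and a sustained sequential write at the peak speeds quoted above, ignoring file-system and caching overhead; `transfer_seconds` is a hypothetical helper.

```python
def transfer_seconds(file_size_mb: float, write_speed_mb_s: float) -> float:
    """Time to write a file at a sustained sequential write speed."""
    if write_speed_mb_s <= 0:
        raise ValueError("write speed must be positive")
    return file_size_mb / write_speed_mb_s


# Figures from the text; 1 GB is treated as 1,000 MB (decimal units).
MOVIE_MB = 1000
HDD_MB_S = 150
SSD_MB_S = 500

hdd = transfer_seconds(MOVIE_MB, HDD_MB_S)
ssd = transfer_seconds(MOVIE_MB, SSD_MB_S)
print(f"HDD: {hdd:.1f} s, SSD: {ssd:.1f} s, speed-up: {hdd / ssd:.2f}x")
# prints "HDD: 6.7 s, SSD: 2.0 s, speed-up: 3.33x"
```

Real-world times are usually longer, because drives rarely sustain their peak speed for an entire file.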

Now, the speed of the Data Storage layer has improved drastically, but what about the Data Access Logic? Is it keeping pace?

Well, over the past decade, CPUs have improved 60-fold, which means that if you had tried to save that movie a decade ago, you would have fallen asleep waiting. Even so, their processing speed increased only 1.5 to 2 times over the past five years. Is that fast enough, considering that the arrival of SSDs made the Data Storage layer up to five times faster?

These discrepancies between the improvements in CPUs and those in HDDs and SSDs have created a bottleneck in data centers. Recently, MIT researchers came up with a technology that could help eliminate this bottleneck and make our data centers more efficient.
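One way to see why uneven improvements create a bottleneck is a simple serial pipeline model: every request passes through the processing layer and then the storage layer, so end-to-end throughput is capped by the slower stage. The baseline rates below are invented for illustration; only the improvement factors (about 2x for CPUs, the upper end of the quoted range, and up to 5x for storage) come from the text, and `pipeline_throughput` is a hypothetical helper.

```python
def pipeline_throughput(stage_rates: dict[str, float]) -> tuple[str, float]:
    """Return the bottleneck stage and the end-to-end throughput.

    In a simple serial pipeline every request passes through each stage,
    so sustained throughput is capped by the slowest stage.
    """
    bottleneck = min(stage_rates, key=stage_rates.get)
    return bottleneck, stage_rates[bottleneck]


# Hypothetical baseline rates in requests per second, for illustration only.
before = {"data_access_logic": 1000.0, "data_storage": 800.0}

# Apply the improvement factors quoted in the text: CPUs ~2x, storage up to 5x.
after = {
    "data_access_logic": before["data_access_logic"] * 2,
    "data_storage": before["data_storage"] * 5,
}

print(pipeline_throughput(before))  # ('data_storage', 800.0)
print(pipeline_throughput(after))   # ('data_access_logic', 2000.0)
```

In this toy model, storage gets 5x faster but the system as a whole gets only 2.5x faster, because the processing stage becomes the new limit.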

Apart from better performance, such as higher input and output speeds, this technology could also cut a server’s power consumption and physical size in half.

The New Data Storage Solution

During the past few years, researchers from the Massachusetts Institute of Technology have been trying to come up with different technologies to reduce the overall power consumption of data storage units and, of course, their physical size.

Credit: Odinate Technologies

One of the most promising solutions they found is called Light Store, and, given its performance, it could revolutionize the way data centers are built and the technology they use.

Light Store vs. Classic Data Storage

In the Data Storage layer, the infrastructure remains basically the same, with small changes to the role these drives play: the Solid State Drives will take on a small portion of the business logic.

The most significant breakthrough of the Light Store technology is in the Data Access Logic.

Out with the Middleware!

The MIT researchers propose removing the power-hungry middleware, such as the Data Access Logic, which in today’s data centers accounts for more than 40% of overall electricity consumption. Once these processors are removed, the plan is to connect the Solid State Drives directly to the data center’s network. The drives themselves will then process the information coming from and going to the user.

An essential aspect worth highlighting is that data manipulation is simplified by using key-value pairs. Today, data centers send and receive data as very complex objects, which consume a lot of resources to process.
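To make the key-value idea concrete, here is a minimal sketch of a drive that stores raw keys and values and answers requests itself, without a middleware server translating them first. `KeyValueDrive`, its `handle` method, and the `PUT`/`GET`/`DEL` request tuples are hypothetical; this is not Light Store’s actual interface or wire protocol.

```python
from typing import Optional


class KeyValueDrive:
    """Hypothetical network-attached drive that speaks a key-value interface.

    Illustrative only: not Light Store's real API. Keys and values are raw
    bytes, so no complex object model has to be parsed along the way.
    """

    def __init__(self) -> None:
        self._store: dict[bytes, bytes] = {}

    def put(self, key: bytes, value: bytes) -> None:
        self._store[key] = value

    def get(self, key: bytes) -> Optional[bytes]:
        return self._store.get(key)

    def delete(self, key: bytes) -> bool:
        """Remove a key; return True if it existed."""
        if key in self._store:
            del self._store[key]
            return True
        return False

    def handle(self, request: tuple) -> Optional[bytes]:
        """Serve a request as if it arrived straight from the network."""
        op, key, *rest = request
        if op == "PUT":
            self.put(key, rest[0])
            return b"OK"
        if op == "GET":
            return self.get(key)
        if op == "DEL":
            return b"OK" if self.delete(key) else None
        raise ValueError(f"unknown operation: {op}")


drive = KeyValueDrive()
drive.handle(("PUT", b"article:42", b'{"title": "Hello"}'))
print(drive.handle(("GET", b"article:42")))  # b'{"title": "Hello"}'
```

The drive never interprets the value: whatever structure the article has stays the client’s concern, which keeps the work done on the drive small.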

Light Store Benefits

According to the researchers, the main benefit of this type of infrastructure is that it reduces the overall power consumption and physical size of data storage units. If all data centers were to use this technology, their power consumption would drop from 1% of the world’s electricity to 0.5%, which is a significant improvement.
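The 1%-to-0.5% projection is a single multiplication: today’s share times the fraction of power that remains. The sketch below assumes the rest of the world’s electricity demand stays constant (a first-order approximation) and adds a hypothetical `adoption` parameter to show what happens if only some data centers switch; `projected_share` is a hypothetical helper.

```python
def projected_share(current_share_pct: float, power_reduction: float,
                    adoption: float = 1.0) -> float:
    """Project data centers' share of world electricity after a power cut.

    current_share_pct: today's share in percent (about 1% per the text).
    power_reduction:   fraction of power saved by adopters (0.5 = halved).
    adoption:          hypothetical fraction of data centers that adopt it.
    Assumes the rest of world demand is unchanged (first-order estimate).
    """
    return current_share_pct * (1 - power_reduction * adoption)


print(projected_share(1.0, 0.5))       # 0.5  (every data center adopts, as in the text)
print(projected_share(1.0, 0.5, 0.5))  # 0.75 (only half of them adopt)
```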

How does this technology achieve this? It removes part of the data processing unit and assigns its tasks to the data layer itself.

Think of this process like this: let’s say you are a native English speaker trying to talk to a foreigner. If they don’t speak your language, you need a translator, and the information flows like this: you, the translator, the foreigner. This is our status quo.

Now, if Light Store were applied to real-life translation as well, it would remove the translator and teach your buddy to speak English. This simplifies the entire process and reduces energy consumption.

Data storage facilities are the Achilles’ heel of today’s technology. An internet connection is useless if you cannot access any data; the internet’s value lies in fast information exchange between peers.

That’s why constant improvements to data management systems are so important. Another crucial aspect to consider when improving this technology is its footprint: the physical space it uses and the energy it consumes.

Light Store is a very innovative invention from the MIT researchers. It could greatly reduce the space data centers need. Most importantly, if companies such as Google, Amazon, and other cloud providers implement this technology, its energy footprint could be cut in half: instead of using 1% of the planet’s electricity, these data centers would use 0.5%. From an environmental point of view, that’s a considerable improvement.

Main Photo Credit: Channel Futures
