September 18, 2014

Just when you thought those nerds at MIT had quieted down, they’re back at it. This time with Facebook!

But what they’re developing could change the way you “internet.”

Alright, I’ll just get to it: they’re all but eliminating data center latency. And that’s barely hyperbole. In testing, the queueing delays nearly vanished.

Finally, those jerks on the internet road will be policed by a stronger, smarter, better-looking traffic cop named “Fastpass.”

What do I mean? Let’s take a closer look.

What Causes Data Center Latency in The First Place?

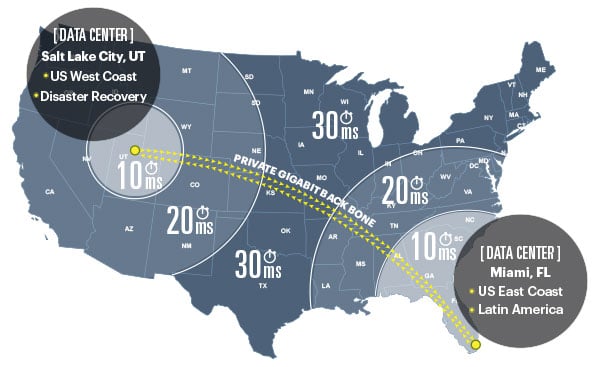

Let’s imagine you’re in Texas but the site you want to access is hosted in a Los Angeles data center. That data has to travel along shared wires across several states before it pops up on your screen. And it’s not just one extremely long wire, and it’s certainly not a straight line, right?

Wherever that wire bends, there’s more than likely an intersection: a switch or router where traffic from several wires meets. All data is queued up at these intersections and let through in turn, as part of a process called “packet switching.” These MIT and Facebook researchers say that these queues are the main cause of latency. And they mean to fix that. Through science.
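To see why those queues hurt, here is a toy model in Python. It is only a sketch under simple assumptions, not anything from the researchers: one switch port forwards one packet per tick, and the function name `queueing_delays` and both traffic patterns are made up for illustration.

```python
def queueing_delays(arrival_ticks):
    """Return how many ticks each packet waits at a port
    that can forward exactly one packet per tick."""
    delays = []
    next_free = 0  # first tick at which the port is idle again
    for tick in sorted(arrival_ticks):
        start = max(tick, next_free)  # wait if the port is still busy
        delays.append(start - tick)
        next_free = start + 1
    return delays

# Same eight packets, two arrival patterns (both hypothetical).
bursty = [0, 0, 0, 0, 4, 4, 4, 4]  # senders all transmit at once
paced = [0, 1, 2, 3, 4, 5, 6, 7]   # someone told each sender when to go

bursty_delays = queueing_delays(bursty)
paced_delays = queueing_delays(paced)

print("bursty:", bursty_delays)
print("paced: ", paced_delays)
```

The port does the same amount of work either way. The only difference is whether packets show up in clumps and wait on each other, or show up one at a time and sail through.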

To quote the researchers:

“We propose that each sender should delegate control — to a centralized arbiter — of when each packet should be transmitted and what path it should follow.”

How a Centralized Arbiter Can Eliminate Data Center Latency

And this is where Fastpass comes in sporting his new blues and spotless white gloves, badge gleaming in the light of the internet—queues be damned!

Before we get into the nitty-gritty, let’s look at some stats.

Last year the researchers experimented with Fastpass on a Facebook server, and it produced a 99.6 percent reduction of average router queue latency. The test occurred at the heaviest of traffic times for the social media powerhouse. Measured from when the data was requested to when it arrived, delays dropped from 3.56 milliseconds to a measly 0.23 milliseconds. Daaaang that’s fast.
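If you want to check the math on that request-to-arrival drop yourself, here is a quick calculation. The helper name `percent_reduction` is just for illustration, and the two inputs are the figures quoted above.

```python
def percent_reduction(before, after):
    """Percentage drop going from `before` to `after`."""
    return (before - after) / before * 100

# Request-to-arrival delay figures quoted in the article.
latency_drop = percent_reduction(3.56, 0.23)
print(f"{latency_drop:.1f}% lower request-to-arrival delay")
```

That works out to about 93.5 percent. It is a separate measurement from the 99.6 percent figure, which describes what happened at the router queues rather than the full round trip.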

Another quote from researchers about Fastpass:

“Not only will persistent congestion be eliminated, but packet latencies will not rise and fall, queues will never vary in size, tail latencies will remain small, and packets will never be dropped due to buffer overflow.”

This is the type of innovation that completely changes the game.

What Makes Fastpass Different?

Surprisingly, Fastpass ignores the rules most Networking 101 classes preach to their pupils on day one: centralized network controllers are bad. They bottleneck data flow and allow for a single failure point.

But, of course, the researchers say they found a way to streamline the process so that the arbiter becomes neither a bottleneck nor a single point of failure.

And how does it do that?

Fastpass breaks the job into two steps: Timeslot Allocation and Path Selection.

For Timeslot Allocation, Fastpass assigns the sender a set of timeslots in which to transmit its data. It keeps track of which source-destination pairs are assigned to each timeslot.

For Path Selection, the arbiter then chooses a path through the network for each packet and communicates that choice back to the sender that requested it. Boom. Done.
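Here is a rough sketch of what those two steps could look like in Python. To be clear, this is a toy and not the researchers' algorithm: it uses a simple greedy pass for timeslots and round-robin for paths, and the function names, host names, and path names (`core1`, `core2`) are all assumptions made up for illustration.

```python
from collections import deque

def allocate_timeslots(demands):
    """Greedy toy allocator.

    demands: list of (src, dst, n_packets).
    Returns a list of timeslots, each a list of (src, dst) pairs in which
    no source sends twice and no destination receives twice.
    """
    pending = deque((src, dst) for src, dst, n in demands for _ in range(n))
    timeslots = []
    while pending:
        busy_src, busy_dst = set(), set()
        slot, deferred = [], deque()
        while pending:
            src, dst = pending.popleft()
            if src in busy_src or dst in busy_dst:
                deferred.append((src, dst))  # try again in a later timeslot
            else:
                busy_src.add(src)
                busy_dst.add(dst)
                slot.append((src, dst))
        timeslots.append(slot)
        pending = deferred
    return timeslots

def select_paths(slot, paths):
    """Spread one timeslot's packets across the available paths, round-robin."""
    return [(src, dst, paths[i % len(paths)]) for i, (src, dst) in enumerate(slot)]

# Hypothetical demand: A wants to send 2 packets to C, B wants 1 to C and 1 to D.
demands = [("A", "C", 2), ("B", "C", 1), ("B", "D", 1)]
schedule = allocate_timeslots(demands)
routed = [select_paths(slot, ["core1", "core2"]) for slot in schedule]

for number, slot in enumerate(routed):
    print(f"timeslot {number}: {slot}")
```

Notice that host C never receives two packets in the same timeslot, so nothing has to wait in a queue in front of it. That is the whole trick: the waiting happens at the sender, on the arbiter's schedule, instead of inside the network.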

The reception of Fastpass has been great so far, but it’s still in its early stages. Stay with us for all the updates as the story continues.

H/t: techrepublic.com