Data centers are essential to major tech companies and to technology as a whole, but they have historically been power hogs: it is estimated that up to 3% of all U.S. electricity goes to powering data centers. That is a huge amount of electricity!

Thankfully, we live in a time when engineers are developing more energy- and cost-efficient ways to build and maintain these massive facilities, making them a little more self-sufficient, especially given the green-power mindset that is prevalent today.

Data Centers Historically

Back in the 1940s, ENIAC (Electronic Numerical Integrator and Computer) was one of the first large computer installations, an early ancestor of the data center. Long before computers were built with compact RAM and simpler wiring, ENIAC took up an enormous amount of space with roughly 18,000 vacuum tubes, along with capacitors and diodes. Over time, engineers developed a better understanding of which materials and components could shrink the massive footprint these early computers required. IBM led the way with virtualization technology and, eventually, the normalization of the supercomputer in data centers.

Putting It into Perspective

Google's search engine is estimated to handle about 5.6 billion queries a day on average. That volume of data would have overwhelmed computing systems even 15 years ago, but Google's numerous data centers have the server capacity to handle this kind of internet usage. However, a study by Yale estimates that "a typical search using its services requires as much energy as illuminating a 60-watt light bulb for 17 seconds and typically is responsible for emitting 0.2 grams of CO2. Which doesn't sound a lot until you begin to think about how many searches you might make in a year."

Simple math shows that this adds up to about 2.04 trillion searches a year and roughly 408.8 billion grams (about 409,000 metric tons) of CO2.
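The arithmetic behind these figures can be checked with a few lines of Python. This is a back-of-the-envelope sketch that simply plugs in the estimates quoted above (about 5.6 billion searches a day, 0.2 g of CO2 per search, and a 60-watt bulb lit for 17 seconds per search); they are cited estimates, not measurements, and the function name is an illustrative choice.

```python
# Back-of-the-envelope check of the search-energy figures quoted above.
# Inputs are the estimates cited in the text, not measured values.
SEARCHES_PER_DAY = 5.6e9        # ~5.6 billion searches per day
CO2_GRAMS_PER_SEARCH = 0.2      # grams of CO2 emitted per search
BULB_WATTS = 60                 # a 60-watt light bulb...
BULB_SECONDS = 17               # ...lit for 17 seconds per search


def annual_search_footprint(days: int = 365) -> dict:
    """Return yearly search count, CO2 (grams and metric tons) and energy (kWh)."""
    searches = SEARCHES_PER_DAY * days
    co2_grams = searches * CO2_GRAMS_PER_SEARCH
    # Energy per search: watts * seconds = joules; 1 kWh = 3.6 million joules
    kwh_per_search = BULB_WATTS * BULB_SECONDS / 3.6e6
    return {
        "searches": searches,
        "co2_grams": co2_grams,
        "co2_metric_tons": co2_grams / 1e6,  # 1 metric ton = 1,000,000 g
        "energy_kwh": searches * kwh_per_search,
    }


if __name__ == "__main__":
    for name, value in annual_search_footprint().items():
        print(f"{name}: {value:,.0f}")
```

With these inputs the sketch reports about 2.04 trillion searches and roughly 408.8 billion grams of CO2 a year; the 60 W × 17 s figure works out to about 0.28 Wh per search, or roughly 579 million kWh a year.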

Although we tend to think of emissions from electricity use as minor compared to other fossil fuel emissions, the sheer volume of power consumed at this scale makes them significant in the grand scheme of things.

If we estimate the total electricity consumed by U.S. data centers in a single year, it is roughly equal to the output of 34 large coal-fired power plants producing 500 MW each. Although these emissions are less visible, they are no less harmful to the environment.
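To get a feel for the size of that comparison, the sketch below converts a fleet of identical 500 MW plants into annual kilowatt-hours. The capacity factor (the fraction of the year a plant effectively runs at full output) is an assumption added here for illustration; the text does not give one, and the 60% value used below is purely hypothetical.

```python
HOURS_PER_YEAR = 8760


def annual_output_kwh(plants: int, megawatts_each: float, capacity_factor: float) -> float:
    """Annual energy (kWh) from identical plants running at the given capacity factor."""
    if not 0.0 < capacity_factor <= 1.0:
        raise ValueError("capacity_factor must be in (0, 1]")
    kw_total = plants * megawatts_each * 1000  # convert MW to kW
    return kw_total * HOURS_PER_YEAR * capacity_factor


# Upper bound: 34 plants at 500 MW each running flat out all year.
print(f"{annual_output_kwh(34, 500, 1.0):,.0f} kWh")
# Same fleet at a hypothetical 60% capacity factor.
print(f"{annual_output_kwh(34, 500, 0.6):,.0f} kWh")
```

Running flat out, the 34 plants would generate about 149 billion kWh a year; at the assumed 60% capacity factor, about 89 billion kWh.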

How Do Data Centers Utilize Green Power?

A 2005 Uptime Institute report found that many data centers were so poorly organized that only 40 percent of the cold air intended for server racks actually reached them, even though the facilities had installed 2.6 times as much cooling capacity as they needed. Since then, however, data center technology has come a long way, including the adoption of solid-state drives (storage devices built on flash memory, with no moving parts) in place of bulky spinning hard drives.
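The two Uptime Institute figures are connected. If only 40 percent of the cold air reaches the racks, a facility needs 1 / 0.4 = 2.5 times its required cooling just to break even, so 2.6 times the capacity delivers only slightly more cooling than the racks actually need. A short sketch of that reasoning (the function names are illustrative):

```python
def required_overprovisioning(delivery_fraction: float) -> float:
    """Cooling capacity needed, as a multiple of rack demand, when only
    `delivery_fraction` of the cold air actually reaches the racks."""
    if not 0.0 < delivery_fraction <= 1.0:
        raise ValueError("delivery_fraction must be in (0, 1]")
    return 1.0 / delivery_fraction


def effective_cooling(installed_multiple: float, delivery_fraction: float) -> float:
    """Cooling that actually reaches the racks, as a multiple of demand."""
    return installed_multiple * delivery_fraction


# With 40% delivery, about 2.5x capacity is needed just to meet demand...
print(required_overprovisioning(0.40))
# ...so 2.6x installed capacity delivers only about 1.04x what the racks need.
print(effective_cooling(2.6, 0.40))
```

In other words, most of that extra cooling capacity was compensating for wasted airflow rather than providing real headroom.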

Energy efficiency has seen an explosion of development across many industries, data centers included. Data management practices have become more effective, and many facilities now rely on server virtualization and automation, making their server systems more self-sufficient.
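Server virtualization saves energy largely through consolidation: many lightly loaded workloads are packed as virtual machines onto fewer physical hosts, and the emptied machines can be powered down. The sketch below illustrates the idea with first-fit decreasing bin packing on CPU demand alone. The workload numbers and the single-resource model are hypothetical simplifications, not how any particular hypervisor actually places VMs.

```python
from typing import List


def consolidate(vm_loads: List[float], host_capacity: float = 1.0) -> List[List[float]]:
    """Pack VM CPU loads onto as few hosts as possible using first-fit decreasing.

    Each load is a fraction of one host's CPU capacity. Returns one list of
    loads per physical host that stays powered on.
    """
    hosts: List[List[float]] = []
    free: List[float] = []  # remaining capacity on each host
    for load in sorted(vm_loads, reverse=True):  # place the largest loads first
        if load > host_capacity:
            raise ValueError(f"VM load {load} exceeds host capacity")
        for i, room in enumerate(free):
            if load <= room:  # first host with enough room wins
                hosts[i].append(load)
                free[i] -= load
                break
        else:  # no existing host had room: power on another one
            hosts.append([load])
            free.append(host_capacity - load)
    return hosts


# Hypothetical workloads that would otherwise each occupy a dedicated server.
loads = [0.10, 0.25, 0.05, 0.30, 0.15, 0.20, 0.10, 0.40]
packed = consolidate(loads)
print(f"{len(loads)} dedicated servers -> {len(packed)} virtualized hosts")
```

Here eight underused machines fit on two hosts; real consolidation also has to account for memory, I/O, and headroom for load spikes.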

Hyperscale automation has moved to the forefront of data center development, with hyperscale data centers built to be as efficient as possible. Dividing server blocks into separate zones with dedicated cooling technologies and, comically enough, open space helps the server components cool more effectively and keeps the temperature inside the data center moderate.

Developers have also discovered that unused or "dead" servers take up valuable space and consume a substantial amount of energy. Removing these servers, or designing a low-power mode for when they are not doing useful work, started a trend of data center (DC) optimization.
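Finding unused servers is largely a measurement exercise: flag machines whose utilization stays below some threshold over an observation window, then estimate what switching them off, or into a low-power mode, would save. The 5% threshold, the power figures, the 30% low-power draw, and the server names below are all hypothetical placeholders for illustration.

```python
from dataclasses import dataclass
from typing import List

HOURS_PER_YEAR = 8760


@dataclass
class Server:
    name: str
    avg_cpu_utilization: float  # average over the observation window, 0.0-1.0
    power_watts: float          # average power draw in watts


def find_idle(servers: List[Server], threshold: float = 0.05) -> List[Server]:
    """Return servers whose average utilization is below `threshold`."""
    return [s for s in servers if s.avg_cpu_utilization < threshold]


def annual_savings_kwh(idle: List[Server], low_power_fraction: float = 0.0) -> float:
    """kWh saved per year if idle servers are shut down (fraction 0.0) or
    moved to a low-power mode drawing `low_power_fraction` of normal power."""
    saved_watts = sum(s.power_watts * (1.0 - low_power_fraction) for s in idle)
    return saved_watts * HOURS_PER_YEAR / 1000  # watt-hours -> kWh


fleet = [
    Server("web-01", 0.45, 300),
    Server("legacy-02", 0.01, 250),
    Server("batch-03", 0.30, 350),
    Server("test-04", 0.02, 200),
]
idle = find_idle(fleet)
print([s.name for s in idle])
print(f"Shut down: {annual_savings_kwh(idle):,.0f} kWh/yr")
print(f"Low-power mode at 30% draw: {annual_savings_kwh(idle, 0.3):,.0f} kWh/yr")
```

In this made-up fleet, two servers are flagged; shutting them down saves about 3,942 kWh a year, while a low-power mode still saves about 2,759 kWh.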

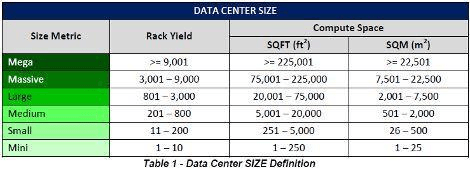

The graphic above shows how much computing space hyperscale data centers of different sizes can provide. Many hyperscale data centers can power servers across 25 to 50 data spaces while still maintaining a stable internal climate.

Tech Knows: How Companies Are Making the Change

Google, unsurprisingly, is the leading lady of green tech companies. The normalization of cloud technology, along with Google's own dedicated cloud, has made it easier for developers to build out the Google Suite in the most energy-efficient way. Google has optimized its CMS and SaaS software so effectively that the GSA released a study showing that the average business can reduce its carbon emissions by 60 to 90% by using the Google Suite.

Google's developers also raise an interesting point: many large companies spend as much energy on overhead cooling as they do on their actual computing systems. Seeing this as a major flaw in a system's effectiveness, Google has brought its PUE (power usage effectiveness, the metric standardized by The Green Grid) down to an impressive 1.11; it does this by building cooling modules underground within the data center and using natural gas to power certain services.
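PUE is total facility energy divided by the energy delivered to IT equipment. A facility that spends as much on cooling and other overhead as on its servers therefore has a PUE of about 2.0, while 1.11 means roughly 11% overhead on top of the IT load. A minimal sketch, using a hypothetical annual IT load:

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy cannot be less than IT energy")
    return total_facility_kwh / it_equipment_kwh


def overhead_kwh(pue_value: float, it_equipment_kwh: float) -> float:
    """Energy spent on everything except IT gear (cooling, power conversion, lighting)."""
    return (pue_value - 1.0) * it_equipment_kwh


it_load = 1_000_000  # hypothetical annual IT energy, kWh
print(pue(2_000_000, it_load))      # cooling/overhead equal to the IT load
print(overhead_kwh(2.0, it_load))   # overhead at a PUE of 2.0
print(overhead_kwh(1.11, it_load))  # overhead at a PUE of 1.11
```

For the same IT load, moving from a PUE of 2.0 to 1.11 cuts overhead energy from 1,000,000 kWh to about 110,000 kWh.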

Other large companies also value the eco-friendly image and functionality of a green data center, and many, such as Facebook, eBay, Apple, and Equinix (with facilities such as AM3), have made the switch to hyperscale data centers.

The timely development of cloud technology and a better understanding of data center design have combined to create a bright future for green data centers. The industry-standard measure of power efficiency, known as PUE (power usage effectiveness), is a statistic that many large companies use as a frame of reference when developing fast and functional data centers.

With the leading companies heading up the effort, many smaller companies will follow suit in their own data centers. As the future of tech unfolds, gains in optimization and efficiency will further perfect the internet and data storage as we know it. Only the sky (and the cloud) is the limit.