Editor’s note: This article was updated on 6/21/2016 at 1:00pm PST. The update can be found at the end of the article.

It’s the middle of the week, and Hump Day is in full effect. Coffee definitely isn’t working, so you need a little pick-me-up—why not take a quick trip down Nostalgia Lane?

This week we’re taking a look at the leaps and bounds of supercomputing! From giant monoliths in a room to a powerful processor in your pocket, the supercomputer has had some drastic overhauls over the years, but one thing remains the same: POWER!

The 1960s

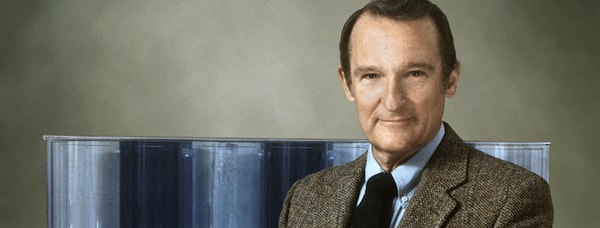

The first supercomputer was released in 1964: the Control Data Corporation (CDC) 6600, designed by Seymour Cray, known as the “Godfather of Supercomputers” (a name I totally made up just now), who would go on to design many of the world’s first supercomputers. The 6600 was relatively small for its processing power, but it still took up about four filing cabinets’ worth of space despite having only a single CPU. One CPU, four filing cabinets to hold it. Think about that.

The 6600 was also “mass produced”: over 100 units were sold, many of them to nuclear-bomb labs that needed to analyze their data, with the very first one being delivered to the CERN Laboratory in Geneva, Switzerland in 1965.

Seymour Cray would leave CDC in 1972 and form his own company, appropriately named Cray Research.

The 1970s

And Cray Research would begin bringing that processing HEAT. Cray would release the Cray 1 in 1976, with the first unit being delivered to the Los Alamos National Laboratory to model nuclear weapons. The Cray 1 was a 64-bit system running at 80 MHz with 8 megabytes of RAM (woah, slow down!). If you aren’t aware, that is less than what the iPhone currently sitting in your pocket has. Your iPhone could technically make models of nuclear weapons (I bet there’s an app for that).

The Cray 1 was designed to look like an American Gladiators obstacle. Just kidding: its iconic horseshoe shape was designed to shorten the wires on the inside of the curve, which helped it achieve that 80 MHz clock speed. But I wouldn’t be surprised if it was also used to hide behind while Nitro shot tennis balls at you from a cannon.

The 1980s

Seymour Cray and his cronies continued to dominate the supercomputer market into the 1980s, when they released the Cray 2 in 1985. Keeping the distinctive horseshoe shape while moving the cooling mechanism off to the side as a separate part, the Cray 2 clocked in with a massive 2 GB of RAM and was the fastest supercomputer in the world for a short time.

Photo Credit: cray.com

It also ran a Unix-based operating system, which gave it a bit of mainstream appeal in universities and corporations that needed the processing power.

The 1990s and Beyond

Things really started heating up in the 90s, as the Japanese finally got sick and tired of watching us create super-fast computers and got into the game themselves. In the early 90s, the title of “fastest computer in the world” changed more than my underwear after burrito day. The NEC SX-3, Fujitsu Numerical Wind Tunnel, and Hitachi SR2201 all burst onto the scene, claiming the title within a few years of each other. The SR2201 brought the power with 2048 processors and a performance peak of 600 gigaflops.*

*FLOPS is an abbreviation for floating-point operations per second, which is used to measure processing power in supercomputers. 600 gigaflops is about 600,000,000,000 operations per second. The Cray 2, for example, peaked at 1.9 gigaflops. So…yeah.
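If you want to check the footnote math yourself, here is a minimal Python sketch of the conversion. The `PREFIXES` table and the `to_flops` helper are names made up for this illustration, and the two figures are simply the ones quoted above, not fresh measurements.

```python
# Standard SI prefixes as they are applied to FLOPS
# (floating-point operations per second).
PREFIXES = {"giga": 10**9, "tera": 10**12, "peta": 10**15}


def to_flops(value, prefix):
    """Convert a figure such as 600 gigaflops into plain operations per second."""
    return value * PREFIXES[prefix]


# Figures quoted in this article (hypothetical variable names).
sr2201 = to_flops(600, "giga")
cray_2 = to_flops(1.9, "giga")

print(f"SR2201: {sr2201:,} operations per second")
print(f"Cray 2: {cray_2:,.0f} operations per second")
print(f"The SR2201 is about {sr2201 / cray_2:.0f} times faster")
```

Going by those two numbers, the SR2201 works out to roughly 316 times the speed of the Cray 2.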

In 1997, Intel partnered with Sandia Labs to create the ASCI Red, a supercomputer modeled after the Intel Paragon, that broke the teraflop barrier with a peak performance of 1.3 teraflops. It also came equipped with 1212 GB of RAM, and took up 1600 sq ft in total. Sometimes you have to sacrifice size for PURE SPEED. The ASCI Red was widely considered one of the most reliable supercomputers ever built, and is still considered a high-water mark today. After the 1992 moratorium on nuclear testing, the ASCI Red was used to simulate nuclear explosions.

Photo Credit: top500.org

The ASCI Red stood on top of the supercomputing list until 2000, when the ASCI White was released. The ASCI White was a machine built by IBM that housed 6 terabytes of RAM and 8,192 POWER3 processors, and delivered a performance of about seven teraflops.

The Japanese wouldn’t be outdone, though. In 2002, they released the 35-teraflop NEC Earth Simulator supercomputer, which—based on its name—I assume just simulates everything about Earth. Unfortunately, it actually just runs global weather models to evaluate global warming effects and to track geophysics. With 5,120 processors, 10 terabytes of RAM and 700 terabytes of storage, the least it could do is run The Sims but only real-er.

Over the next few years, the processors and parts would get smaller, but that only meant more of them could fit into huge spaces. The title of “world’s fastest computer” has changed multiple times since the NEC Earth Simulator, with the current top spot going to China’s Tianhe-2 supercomputer, whose name roughly translates to “Milky Way 2.”

Photo Credit: popsci.com

The Tianhe-2 boasts a benchmark performance of 33.86 petaflops, backed by 1,375 TiB (tebibytes) of memory, 12.4 PB of storage, and a whole boatload of processors. It has been the world’s fastest computer since 2013, and there seem to be no signs of anything stopping it from taking the top spot again for 2016.

What’s it used for? Weeeeeeeell, China won’t officially say, which totally isn’t scary or anything.

However, President Obama isn’t going to stand by and let the Chinese be the supercomputing rulers of the world that easily. In 2015, he created the National Strategic Computing Initiative, which will be researching how to create the first 100-petaflop supercomputer. USA! USA! USA!

UPDATE: Well, we lost. USA! US… I can’t do it anymore….

We hope you enjoyed this trip down Memory Lane!