Although it now seems as if smartphones have always been part of our lives, the first Apple iPhone was released just over a decade ago, in mid-2007. This device revolutionized mobile phone technology and drastically changed how manufacturers approach phone design. Since then, every other major mobile phone manufacturer has developed smartphones inspired by the vision behind the original iPhone.

Siri, a natural language virtual assistant application, has become synonymous with Apple phones and their iOS operating system. What many people do not know is that Siri was not always under the Apple umbrella. Before the release of the iPhone 4S, the first phone to include Siri, the developers behind the virtual assistant were planning to release versions for Android and the now-defunct BlackBerry OS. Before this could happen, Apple acquired Siri, and it became proprietary Apple technology. Apple subsequently removed the standalone Siri app from the App Store.

Since it was first rolled out on the iPhone 4S, Siri has gone through a great deal of development. At launch, the software handled basic voice commands, such as “call Karen” and “set dentist appointment reminder for March 5”. Since then, the application has grown to cover a much wider range of functions and has recently been integrated into Apple’s smart home technology. This has made Siri heavily dependent on Apple’s data centers.

Apple Data Center

Most people, rightly, see Apple as a technology company. It has been heavily engaged in developing and producing new technologies and computer hardware, mostly for home computers and mobile phones. Apple also has to handle a great deal of data, especially since the release of its iCloud service. On top of this, Siri’s continued integration into Apple’s products has required a great deal of back-end data and processing for the service to work.

Apple has massive data centers on both coasts of the mainland United States, as well as a number of others around the world. These data centers house rows upon rows of servers that send and receive data. Without them, Apple’s large catalog of services would not be possible.

It is important to understand that Siri, unlike Google’s AI-driven assistant, performs its tasks on the server side rather than the client side. Whereas Google’s assistant performs the initial voice recognition on the device itself before pulling information from the server, Siri performs nearly all of its tasks on the server. In earlier years, this caused Siri’s response times to lag somewhat behind its competitor’s. More recently, Apple has adjusted the design and architecture of Siri and how it interacts with the server, which has greatly increased query speeds.

How Siri Works

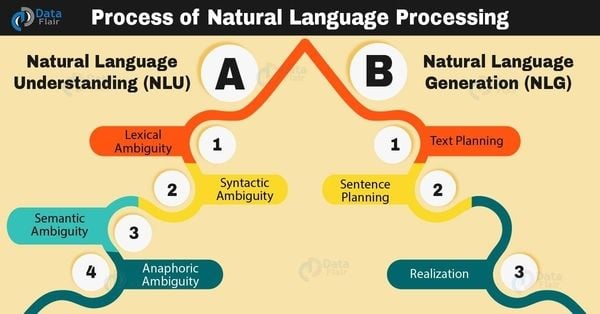

Siri relies on two primary technologies: natural language processing and speech recognition.

Natural Language Processing

Natural language processing (NLP) is a branch of computer science concerned with AI programs that understand and interpret human language. Computers ultimately operate on machine code: binary-encoded instructions that the processor executes directly. Machine code, although designed by humans, is very difficult for humans to read and manipulate.

The rise of programming languages such as Java, C++, C#, and others gave humans an intelligible way to write instructions that are ultimately translated into machine code, telling a computer how to interpret and manipulate information at its most basic level.

Human speech needs a similar bridge: a system for taking what people say and breaking it down into pieces of information that computers can work with. That process is what natural language processing refers to.

NLP breaks down into two main categories: syntax and semantics. Syntax refers to the structure of sentences and focuses on the rules of grammar. Semantics, on the other hand, deals with meaning: what is the person trying to say when they say “Siri, set my alarm for 7 am”? On the back end, NLP software looks at several aspects of the input.
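
As an illustration of the semantic side, the Python sketch below turns the example request into a structured “intent” with named slots, the kind of output that later steps can act on. The `Intent` class, the intent names, and the regular-expression patterns are all hypothetical; this is not how Siri is implemented, and real assistants typically learn these mappings from data instead of relying on hand-written rules.

```python
import re
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Intent:
    """A structured meaning: what the user wants (name) plus the details (slots)."""
    name: str
    slots: Dict[str, str] = field(default_factory=dict)


# Hypothetical hand-written patterns, checked in order. Each named group
# becomes a slot in the resulting Intent.
PATTERNS = [
    ("set_alarm", re.compile(
        r"set (?:my |an )?alarm for (?P<time>\d{1,2}(?::\d{2})? ?[ap]\.?m\.?)", re.I)),
    ("call_contact", re.compile(r"\bcall (?P<contact>[a-z]+)", re.I)),
]


def interpret(utterance: str) -> Optional[Intent]:
    """Map an utterance to an Intent, or None if no pattern matches."""
    # Strip a leading "Siri," or "Hey Siri," so the patterns see only the request.
    text = re.sub(r"^\s*(hey\s+)?siri,?\s*", "", utterance, flags=re.I)
    for name, pattern in PATTERNS:
        match = pattern.search(text)
        if match:
            return Intent(name, match.groupdict())
    return None


print(interpret("Siri, set my alarm for 7 am"))
# Intent(name='set_alarm', slots={'time': '7 am'})
```

Separating the intent name from its slots lets the same “set_alarm” handler serve “7 am”, “6:30 pm”, and any other time the user says.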

Grammar

NLP software uses the grammatical rules of a language to help determine the basic structure of a sentence. It takes a spoken statement and converts it into text form.

Parsing

In most languages, grammatical structure is sometimes ambiguous, which can allow several different interpretations. A common example of this in English is comma placement: where a comma is placed can dramatically alter the meaning of a sentence. Parsing is the process in which the NLP software analyzes the text and determines the most likely grammatical structure in cases where ambiguity could lead to an incorrect text representation.
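
Spoken input has no commas, so a voice assistant more often faces attachment ambiguity, such as whether “with the telescope” describes the man or the seeing in “I saw the man with the telescope”. The sketch below uses the CYK algorithm over a small grammar to count how many valid parse trees a sentence has; a real parser would then have to pick the most likely one. The grammar, lexicon, and function name are illustrative assumptions, not Siri’s.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical toy grammar in Chomsky normal form: every rule is
# A -> B C (binary) or A -> word (lexicon).
BINARY_RULES: List[Tuple[str, str, str]] = [
    ("S", "NP", "VP"),
    ("VP", "V", "NP"),
    ("VP", "VP", "PP"),   # "saw the man" + "with the telescope"
    ("NP", "Det", "N"),
    ("NP", "NP", "PP"),   # "the man" + "with the telescope"
    ("PP", "P", "NP"),
]
LEXICON: Dict[str, List[str]] = {
    "i": ["NP"],
    "saw": ["V"],
    "the": ["Det"],
    "man": ["N"],
    "telescope": ["N"],
    "with": ["P"],
}


def count_parses(sentence: str, start: str = "S") -> int:
    """Count distinct parse trees for the sentence using CYK."""
    words = sentence.lower().split()
    n = len(words)
    # chart[i][j][A] = number of ways symbol A can derive words[i:j]
    chart = [[defaultdict(int) for _ in range(n + 1)] for _ in range(n + 1)]
    for i, word in enumerate(words):
        for symbol in LEXICON.get(word, []):
            chart[i][i + 1][symbol] += 1
    # Build longer spans from every split point k of shorter ones.
    for length in range(2, n + 1):
        for i in range(n - length + 1):
            j = i + length
            for k in range(i + 1, j):
                for parent, left, right in BINARY_RULES:
                    ways = chart[i][k][left] * chart[k][j][right]
                    if ways:
                        chart[i][j][parent] += ways
    return chart[0][n][start]


print(count_parses("I saw the man with the telescope"))  # 2
```

A count above one flags exactly the situation described above: the words alone support more than one structure, and the software must use probabilities or context to choose.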

Lexical Semantics

NLP software is greatly concerned with determining the most likely meaning of a word in a given context.

Word Sense Disambiguation

Many words have multiple meanings depending on context, and other words sound the same but are spelled differently and refer to different things, such as “to”, “too”, and “two”. NLP software reduces this ambiguity by determining which word or meaning best fits the context.
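
One classic, well-documented technique for word sense disambiguation is the simplified Lesk algorithm: choose the dictionary sense whose definition shares the most words with the surrounding sentence. The sketch below assumes a tiny hand-written sense inventory (`SENSES`) and stopword list; it shows the idea only and is not a description of what Siri does.

```python
from typing import Dict, Set

# Hypothetical mini sense inventory; a real system would draw glosses from a
# lexical database such as WordNet rather than a hand-written dictionary.
SENSES: Dict[str, Dict[str, str]] = {
    "bank": {
        "bank/finance": "an institution that accepts deposits of money and makes loans",
        "bank/river": "the sloping land beside a river or stream of water",
    },
}

# Common function words carry little meaning, so they are ignored in overlaps.
STOPWORDS: Set[str] = {"a", "an", "the", "of", "or", "and", "that",
                       "to", "my", "at", "on", "in", "i"}


def content_words(text: str) -> Set[str]:
    """Lowercased words with punctuation and stopwords removed."""
    return {w.strip(".,?!").lower() for w in text.split()} - STOPWORDS


def simplified_lesk(word: str, sentence: str) -> str:
    """Pick the sense whose gloss shares the most words with the sentence."""
    context = content_words(sentence)
    best_sense, best_overlap = "", -1
    for sense, gloss in SENSES[word].items():
        overlap = len(context & content_words(gloss))
        if overlap > best_overlap:  # ties keep the earlier-listed sense
            best_sense, best_overlap = sense, overlap
    return best_sense


print(simplified_lesk("bank", "I need to deposit money at the bank"))  # bank/finance
print(simplified_lesk("bank", "We sat on the bank of the river"))      # bank/river
```

The word “bank” is identical in both sentences; only the neighboring words (“money” versus “river”) decide which meaning wins.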

Speech Recognition

The field of machine speech recognition is not new. It has been around for several decades and is highly interdisciplinary. As one might guess, the main objective of speech recognition is to let software recognize human speech and convert it into text. When someone starts Siri by saying “Hey Siri”, the voice recognition software kicks in. It records whatever command is being given and then passes the recorded speech to the programs that handle natural language processing.
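
Deciding where a spoken command starts and stops can be illustrated with energy-based voice activity detection, one of the simplest endpointing techniques: split the audio into short frames and keep the span whose loudness exceeds a threshold. The frame size (160 samples, or 10 ms at an assumed 16 kHz sample rate), the threshold, and the function names are illustrative assumptions; production assistants generally use trained models rather than a fixed threshold.

```python
import math
from typing import List, Optional, Tuple


def frame_energies(samples: List[float], frame_size: int) -> List[float]:
    """Root-mean-square (RMS) energy of each non-overlapping, full-length frame."""
    energies = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        energies.append(math.sqrt(sum(s * s for s in frame) / frame_size))
    return energies


def find_speech(samples: List[float], frame_size: int = 160,
                threshold: float = 0.02) -> Optional[Tuple[int, int]]:
    """Return (start_sample, end_sample) covering every frame louder than
    the threshold, or None if no frame is loud enough."""
    loud = [i for i, e in enumerate(frame_energies(samples, frame_size))
            if e > threshold]
    if not loud:
        return None
    return loud[0] * frame_size, (loud[-1] + 1) * frame_size


# Synthetic input: 50 ms of silence, 100 ms of a 440 Hz tone standing in
# for speech, then 50 ms of silence (all at the assumed 16 kHz rate).
silence = [0.0] * 800
tone = [0.5 * math.sin(2 * math.pi * 440 * t / 16000) for t in range(1600)]
print(find_speech(silence + tone + silence))  # (800, 2400)
```

Only the samples between the returned boundaries would need to be handed on for recognition, which keeps silence out of the recording.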

Once these two steps have been completed, Siri performs whatever task it has been asked to do. This could be anything from playing music to setting an appointment or answering a basic question.

Advances in speech recognition and natural language processing have reduced Siri’s error rate to below 10%. While there is still room for improvement, this is a major accomplishment: getting a computer to understand the intricacies of human communication is no easy task.
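
Speech recognition accuracy is commonly reported as word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn the recognizer’s transcript into the correct one, divided by the number of words in the correct transcript. The 10% figure is not tied to a particular metric here, so treat this Python sketch as an illustration of how such an error rate can be computed, not of how Apple measures Siri.

```python
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / words in reference,
    computed with a word-level Levenshtein (edit distance) table."""
    ref: List[str] = reference.lower().split()
    hyp: List[str] = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # dist[i][j] = fewest edits to turn ref[:i] into hyp[:j]
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(dist[i - 1][j] + 1,         # deletion
                             dist[i][j - 1] + 1,         # insertion
                             dist[i - 1][j - 1] + cost)  # substitution or match
    return dist[len(ref)][len(hyp)] / len(ref)


# One misheard word out of six: "seven" recognized as "eleven".
print(word_error_rate("set my alarm for seven am", "set my alarm for eleven am"))
```

A single substitution in this six-word command already gives an error rate of about 17%, which shows how demanding a sub-10% target is for short voice commands.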

Main Photo Credit: Apple