Today’s digital world demands connectivity that is not only reliable but also fast. Individuals and businesses alike want the fastest connection possible. Internet service providers offer higher-bandwidth plans to curb these cravings, but factors other than bandwidth also directly affect network speed. This article discusses those factors, including latency, throughput, and of course bandwidth, and the role each plays in network speed and performance.

What Is Bandwidth?

The name bandwidth is derived from the words “band” and “width.” In telecommunications, a band, or frequency band, is an interval in the frequency domain. Communication signals must occupy a range of frequencies, so bandwidth is literally the width of the frequency band a signal occupies.

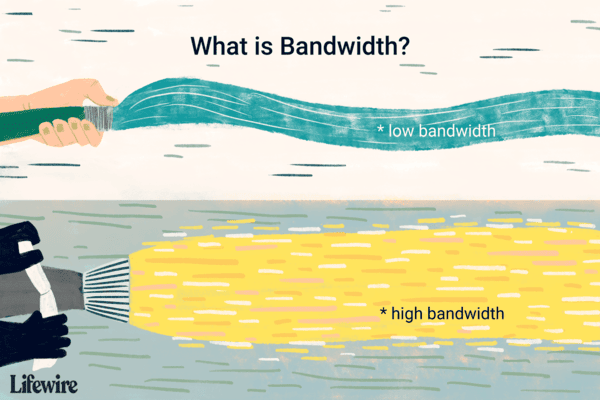

Bandwidth is the maximum amount of data that can be transmitted through an internet connection in a given amount of time. While bandwidth isn’t directly responsible for speed, it determines the available capacity. A connection with higher bandwidth can move data faster and more easily than a connection with lower bandwidth. A common analogy is a pipe: bandwidth is how wide the pipe is. The wider the pipe, the more easily and potentially faster liquid can move through it. In the same way, the larger the bandwidth, the more easily and potentially faster data can move through the connection.

Early bandwidth measurements used bps, or bits per second, but advancements over the years have given modern networks far more capacity. Network connections are now measured in Mbps (megabits per second) and Gbps (gigabits per second). Some confusion arises between bits and bytes. There are eight bits in every byte, so 25 Mbps (megabits per second) is not the same as 25 MBps (megabytes per second); it works out to about 3.1 MBps.
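The bits-versus-bytes distinction is easy to check with a little arithmetic. The sketch below is only an illustration: the function names and the 500 MB file size are made up for the example, and the transfer time is a best case that ignores latency and protocol overhead.

```python
BITS_PER_BYTE = 8


def mbps_to_megabytes_per_second(mbps: float) -> float:
    """Convert megabits per second (Mbps) to megabytes per second (MBps)."""
    return mbps / BITS_PER_BYTE


def ideal_transfer_seconds(size_megabytes: float, bandwidth_mbps: float) -> float:
    """Best-case time to move a file, ignoring latency and protocol overhead."""
    return size_megabytes / mbps_to_megabytes_per_second(bandwidth_mbps)


if __name__ == "__main__":
    # A 25 Mbps plan moves at most 3.125 megabytes each second.
    print(mbps_to_megabytes_per_second(25))  # 3.125
    # A hypothetical 500 MB file therefore needs at least 160 seconds.
    print(ideal_transfer_seconds(500, 25))  # 160.0
```

In other words, a plan advertised at 25 Mbps will never show a download rate of 25 megabytes per second, even under perfect conditions.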

Another common misconception is that bandwidth is the only thing that affects network speed. Although data can move through a connection faster with more bandwidth, other factors matter as well. Latency is one of them.
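To see why extra bandwidth alone does not guarantee a faster experience, consider a deliberately simplified model in which fetching something costs one fixed delay plus the time to push the bits through the connection. This is an assumption made for illustration, not how any particular protocol behaves; real connections usually need several round trips. The function name and the sample numbers are hypothetical.

```python
def fetch_time_seconds(size_bytes: int, bandwidth_mbps: float, latency_ms: float) -> float:
    """Simplified model: one fixed delay plus the time to transmit the payload."""
    delay = latency_ms / 1000.0
    transmit = (size_bytes * 8) / (bandwidth_mbps * 1_000_000)
    return delay + transmit


if __name__ == "__main__":
    page = 100_000  # a hypothetical 100 KB web page
    for mbps in (100, 1000):
        total = fetch_time_seconds(page, bandwidth_mbps=mbps, latency_ms=80)
        print(f"{mbps:>4} Mbps with 80 ms delay: {total * 1000:.1f} ms")
```

Under these assumptions, a tenfold increase in bandwidth shaves only a few milliseconds off a small request, because the fixed delay dominates the total.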

What Is Latency?

Although bandwidth is one of the main factors that affect network speed, it isn’t the only one. Users’ dissatisfaction often comes from lagging or buffering, which usually isn’t caused by bandwidth but by latency, a delay in data transmission. Data is sent from one location and received at another, which means each data packet has to travel that distance through cables or over wireless frequencies. Because of the laws of physics, data will always be limited in speed: it can never travel faster than the speed of light.
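The speed-of-light limit puts a hard floor under latency that can be estimated from distance alone. The sketch below assumes signals in optical fiber travel at roughly two-thirds of the speed of light in a vacuum, which is a common rule of thumb rather than a figure from this article; the 4,000 km distance is a hypothetical example, and real routes add delay from routers and indirect cable paths.

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458
FIBER_VELOCITY_FACTOR = 0.67  # assumption: roughly two-thirds of c in glass


def min_one_way_delay_ms(distance_km: float, velocity_factor: float = 1.0) -> float:
    """Lower bound on one-way delay over a straight path of the given length."""
    speed_km_per_s = SPEED_OF_LIGHT_KM_PER_S * velocity_factor
    return distance_km / speed_km_per_s * 1000.0


if __name__ == "__main__":
    distance = 4000  # hypothetical distance in kilometers
    vacuum = min_one_way_delay_ms(distance)
    fiber = min_one_way_delay_ms(distance, FIBER_VELOCITY_FACTOR)
    print(f"vacuum: {vacuum:.1f} ms one way, {2 * vacuum:.1f} ms round trip")
    print(f"fiber:  {fiber:.1f} ms one way, {2 * fiber:.1f} ms round trip")
```

No amount of added bandwidth lowers these numbers; only shortening the distance does.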

Several different types of latency create lags and delays when sending and receiving data, including disk latency, RAM latency, CPU latency, audio latency, and video latency.

Disk latency is the delay between the moment data is requested from a physical storage device and the moment that data is received. It is associated with the rotational latency and seek time of physical hard disk drives. RAM latency is the number of clock cycles it takes for a memory module to access the requested data and make it available. RAM latency is sometimes called CAS latency.
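Both of these latencies can be approximated with standard back-of-the-envelope formulas, which are not taken from this article. On average a spinning disk has to wait half a rotation for the requested sector, and for DDR memory the clock runs at half the quoted data rate, so one cycle lasts 2000 divided by the data rate (in MT/s) nanoseconds. The 7,200 RPM drive and the DDR4-3200 CL16 module below are hypothetical examples.

```python
def average_rotational_latency_ms(rpm: float) -> float:
    """Average wait for the platter to spin to the right sector: half a turn."""
    ms_per_rotation = 60_000.0 / rpm
    return ms_per_rotation / 2


def cas_latency_ns(cas_cycles: int, data_rate_mts: float) -> float:
    """Convert a CAS latency given in clock cycles to nanoseconds for DDR memory."""
    ns_per_cycle = 2000.0 / data_rate_mts  # DDR clock is half the data rate
    return cas_cycles * ns_per_cycle


if __name__ == "__main__":
    print(f"7200 RPM disk: {average_rotational_latency_ms(7200):.2f} ms")
    print(f"DDR4-3200 CL16: {cas_latency_ns(16, 3200):.1f} ns")
```

The gap between milliseconds for a disk and nanoseconds for memory shows how widely latency varies from one component to another.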

There are also CPU latency, audio latency, and video latency, each of which is again caused by a bottleneck that creates a delay. Audio latency is the time it takes for an audio signal to travel from one location to another. Video latency is the time it takes for video to travel from the lens to the display.

Data center users will primarily deal with network latency. This measures how quickly information moves across network connections, whether over cellular and Wi-Fi signals or over fiber optic cables and copper wires.
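One rough way to observe network latency is to time how long it takes to open a TCP connection, since the handshake needs about one round trip. The sketch below uses only Python's standard library; the function names are invented for this example, and the demo measures a throwaway listener on the local machine so that it runs anywhere. Point it at a server you operate to measure a real network path.

```python
import socket
import time
from statistics import median


def tcp_connect_latency_ms(host: str, port: int, timeout: float = 3.0) -> float:
    """Time one TCP connection setup to host:port and return it in milliseconds."""
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        elapsed = time.perf_counter() - start
    return elapsed * 1000.0


def median_latency_ms(host: str, port: int, samples: int = 5) -> float:
    """Take several measurements and return the median to smooth out jitter."""
    return median(tcp_connect_latency_ms(host, port) for _ in range(samples))


if __name__ == "__main__":
    # Self-contained demo against a temporary listener on this machine.
    # Replace host and port with a server you operate to measure a real path.
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as listener:
        listener.bind(("127.0.0.1", 0))
        listener.listen()
        host, port = listener.getsockname()
        print(f"median connect time: {median_latency_ms(host, port):.3f} ms")
```

Connect time is only a proxy: it also includes a little operating system overhead, so treat the result as an estimate rather than a precise round-trip measurement.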

How Can You Reduce Network Lag?

Cloud services can suffer from latency issues because everything travels over the connections mentioned earlier, including cellular and Wi-Fi signals, fiber optics, and copper wires.

One solution that has improved network latency is edge computing. Traditional cloud computing often relies on a single centralized data center, but with a wider, more distributed network, the distance that data packets have to travel is cut down. Using a mixture of IoT (Internet of Things) devices and edge data centers positioned in specific areas, businesses can place processing power closer to users and reduce latency. This reduces how far information travels and can increase performance for companies and their users.

Another way to reduce latency is to use colocation data centers, which can solve the same problem edge computing addresses. Using a colocation service with locations in various metropolitan areas brings computing power closer to companies and their users. A colocation provider like Colocation America has strategically located data centers in major metropolitan areas including Los Angeles, San Francisco, Chicago, New York, New Jersey, Boston, Philadelphia, Connecticut, and Miami. These data centers can close the proximity gap for many businesses and their users, reducing network latency.
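The proximity argument can be sketched in a few lines: given a user's location, pick the site with the shortest great-circle distance. The coordinates below are approximate city centers for a few of the metro areas named above, used purely for illustration; they are not actual facility locations, and the user position is hypothetical. Real routing depends on network topology, not just geography.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0

# Approximate city-center coordinates (latitude, longitude); illustrative only.
SITES = {
    "Los Angeles": (34.05, -118.24),
    "Chicago": (41.88, -87.63),
    "New York": (40.71, -74.01),
    "Miami": (25.76, -80.19),
}


def haversine_km(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Great-circle distance between two (latitude, longitude) points in km."""
    lat1, lon1 = map(radians, a)
    lat2, lon2 = map(radians, b)
    h = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(h))


def nearest_site(user: tuple[float, float], sites: dict = SITES) -> str:
    """Return the name of the site geographically closest to the user."""
    return min(sites, key=lambda name: haversine_km(user, sites[name]))


if __name__ == "__main__":
    user = (32.72, -117.16)  # hypothetical user near San Diego
    site = nearest_site(user)
    print(site, f"{haversine_km(user, SITES[site]):.0f} km away")
```

A user served from the closest site instead of one across the country avoids thousands of kilometers of travel on every request.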

Conclusion

Increasing network speed should be a priority for businesses with an online presence, as well as for large corporations with employees in multiple locations. This can be done in a variety of ways, including increasing bandwidth and decreasing latency through edge computing or a colocation service with strategically located data centers. Colocation data centers also offer flexible bandwidth options and edge computing strategies.

Improving these aspects will allow businesses to deliver faster and improved customer service. If you are looking to increase your network speed, partnering with a colocation provider can be beneficial. Contact Colocation America to help build a strategy and solution for all of your networking business needs. Your current and future customers will be glad you did.